This week a disturbing bombshell rocked the AI development world. The firing of Sam Altman, CEO of the leading AI development firm ‘OpenAI’, unleashed an avalanche of speculation and insider leaks that began to paint a grim picture of some potentially frightening secret breakthroughs at the spotlighted AI firm.

Though nothing is confirmed just yet, the crowdsourcing community has pieced together a series of clues about what transpired, suggesting that Altman was let go after a disturbing AI breakthrough in an internal project called Q* (or Qstar) spooked OpenAI’s board of directors, who, as the theory goes, felt betrayed by Altman’s deceptive concealment of the project’s development.

I happen to be a sucker for clandestine intrigue and espionage stories, and this one has all the trappings of the rogue-AI-gone-conscious fright tales that sci-fi taught us to expect sooner or later. It also plays on our general understanding of the corruptibility of human nature, and on the suspicion that those behind leading global developments are typically predisposed to the Dark Triad traits.

So, what exactly happened?

Without getting lost in the weeds, we know there was an unprecedented wave of turmoil within OpenAI. For those who don’t know, this is the leading AI company which, per a recent poll, most experts expect to be the first to usher in some form of AGI, consciousness, superintelligence, etc. Many of its staff are former Google Brain and DeepMind researchers, and with its ChatGPT product it has a distinct head start over the ever-growing pack.

As background: even long before these events, rumors echoed from deep within the halls of OpenAI’s inner sanctum that AGI had been achieved internally. One thing laypeople have to understand is that the systems we’re privy to are heavily scaled-down consumer models that are ‘detuned’ in a variety of ways. However, some of the earliest ones released in the past year or so were “let out into the wild” without the safety nets seen today. This resulted in a series of infamously wild interactions, like those with Bing’s “Sydney” alter ego, which convinced many shaken observers that it had gained emergent properties.

After the fear and backlash, companies began to curtail their consumer-ready releases, so many of those who are skeptical of AI’s capabilities based on the publicly available chatbots are not seeing the full extent of those capabilities, or even close to it.

The biggest way these consumer versions are clipped is through limits on how many tokens (chunks of text) a conversation can hold, as well as on the compute and ‘inference’ time the model is allowed to spend answering your question. For a public-facing, downgraded consumer variant, the company wants an easy, ergonomic system that is responsive and has the widest appeal and usability. This means the chatbots you’re wont to use are given only seconds of inference to produce a response. Additionally, they have training-data cutoff dates, which means they may know nothing past a certain point months or even a year ago. And that doesn’t even cover other, more technical limitations.
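The token limit described above usually takes the form of a fixed context window: once a conversation grows past it, the oldest material has to go. Here is a minimal sketch of that trimming, assuming whitespace-separated words stand in for tokens (real systems use subword tokenizers) and using a hypothetical helper, `fit_to_context`. It is not how ChatGPT or any particular product actually manages its history.

```python
from typing import List

def fit_to_context(messages: List[str], max_tokens: int) -> List[str]:
    """Keep the most recent messages whose combined size fits the token budget.

    Assumption: one whitespace-separated word counts as one token. Real
    tokenizers split text into subword pieces, so actual counts differ.
    """
    kept: List[str] = []
    used = 0
    for message in reversed(messages):      # newest first
        cost = len(message.split())         # crude token estimate
        if used + cost > max_tokens:
            break                           # this and all older messages are dropped
        kept.append(message)
        used += cost
    return list(reversed(kept))             # restore chronological order

history = [
    "hello there",
    "tell me about AES",
    "it is a block cipher",
    "how long to brute force it",
]
print(fit_to_context(history, max_tokens=11))
```

Walking backwards from the newest message keeps the most recent turns intact, which is why a long chat can seem to "forget" its own beginning.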

In the internal “full” versions, AI developers can play with systems that have vastly larger context windows and inference budgets, which likely makes them far more “intelligent”: systems that can come close to ‘presenting as’ conscious, or that can at least abstract and reason to a far greater extent.

The analogy is to that of home computers. For mass-produced consumer products, companies have to make computers that are affordable by the average ‘everyman’, in the $1000-2000 range. So when you see the latest “most powerful” model, you should understand that this isn’t the most powerful technology that can currently be made—it’s simply the most powerful they can make widely available for cheap. But if they really wanted to, they could create a single system that costs millions, but is exponentially more powerful than your home PC.

The same goes for AI. ChatGPT is meant to be an affordable consumer product, but the internal variants, which have no monetary, time, or hardware constraints, are far more capable than you’re likely to imagine. That’s a sobering thought, given that GPT-4 can already boggle the mind with its raw capabilities in some categories.

So, to get back on track: for months there have already been rumors of ‘worrying’ advances in internal models. These rumors often came from industry insiders in the know, or from anonymous ‘leakers’ posting to Twitter and elsewhere, and were often seemingly corroborated by cryptic remarks from executives.

That’s what happened this time. In the wake of the unraveling at OpenAI, which saw over 500 employees threaten to resign en masse, researchers began connecting the threads and found a trail laid over roughly the past six months by key executives, from Sam Altman to Ilya Sutskever himself, that seemed to point precisely to the area of development at the heart of the Q* saga.

It revolves around a new breakthrough in how artificial intelligence solves problems, one that could potentially leapfrog current models by several generations. Making the rounds were videos of Altman at recent talks forebodingly intimating that he no longer knew whether they were creating a program or a ‘creature’. Other signs pointed to the revolt inside OpenAI centering on a loss of confidence and trust in Altman, who was perceived to have “lied” to the board about the scale of recent breakthroughs, which were considered dangerous enough to spook many people internally. The quote that made the rounds:

“Ahead of OpenAI CEO Sam Altman's four days in exile, several staff researchers sent the board of directors a letter warning of a powerful artificial intelligence discovery that they said could threaten humanity, two people familiar with the matter told Reuters.”

Adding fuel to the fire was the Reddit “leak” of an alleged, heavily redacted, internal letter which spoke of the types of breakthroughs this project had achieved, that—if true—would be world changing and extremely dangerous at once:

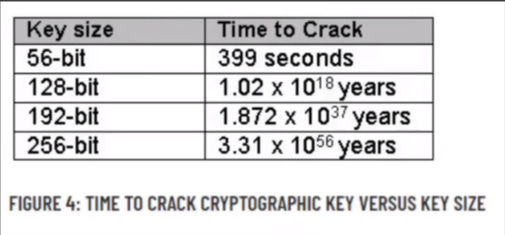

In essence, the letter paints a picture of Q* (presumed to be the ‘Qualia’ referred to here) achieving a sort of self-referencing meta-cognition that allows it to ‘teach itself,’ and thus to reach the ‘self-improvement’ milestone considered part of AGI and potentially superintelligence. The most eye-opening ‘revelation’ was that it allegedly cracked an AES-192 ciphertext in a way that surpasses even the capabilities of quantum computing systems. This is by far the hardest claim to believe: that an ‘intractable’ cipher with a 192-bit key, meaning 2^192 possible keys, was cracked in short order.

As this explanatory video makes clear, the time to brute-force a 192-bit key is calculated to be on the order of 10^37 years, trillions upon trillions of times the roughly 1.4 × 10^10-year age of the universe.
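The arithmetic behind that figure is easy to reproduce. The sketch below assumes an attacker testing 10^13 keys per second, a rate chosen purely for illustration and not a benchmark of any real hardware. At that speed, exhausting the 2^192 keyspace takes about 2 × 10^37 years, and the expected time (finding the key halfway through, on average) is about 10^37 years.

```python
# Back-of-the-envelope brute-force estimate for an AES-192 key.
SECONDS_PER_YEAR = 365.25 * 24 * 3600      # Julian year, about 3.156e7 s
AGE_OF_UNIVERSE_YEARS = 1.38e10            # approximate

def years_to_exhaust(key_bits: int, keys_per_second: float) -> float:
    """Years needed to try every key in a key_bits-bit keyspace at a fixed rate."""
    keyspace = 2 ** key_bits               # AES-192 -> 2**192 possible keys
    return keyspace / keys_per_second / SECONDS_PER_YEAR

# Assumed attacker speed: 10**13 keys per second (illustrative only).
rate = 1e13
full = years_to_exhaust(192, rate)
average = full / 2                          # on average the key turns up halfway through
print(f"full search:    {full:.1e} years")
print(f"average search: {average:.1e} years")
print(f"times the age of the universe: {average / AGE_OF_UNIVERSE_YEARS:.1e}")
```

Each extra key bit doubles the time, so no plausible gain in raw speed closes a gap of this size. Grover's algorithm on an idealized quantum computer only cuts the work to roughly 2^96 steps, which is still far out of reach.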

On one hand, this immediately screams fake. On the other, if someone really were fabricating, wouldn’t they have chosen a far more believable claim to give it a semblance of reality? It should also be noted that many of the most significant AI developments are regularly leaked on Reddit, 4chan, etc., via the same kind of murky anonymous accounts, and are often later validated; it’s just how things work nowadays.

If the leak above is true, it would represent an immediate and total compromise of global security systems, as nothing would be safe from cracking. This includes all cryptocurrency. Bitcoin itself relies on elliptic-curve signatures (ECDSA over the secp256k1 curve) and SHA-256 hashing rather than AES, but a system that could defeat AES-192 this easily would presumably make short work of those too. It could then forge signatures on the Bitcoin network and take any amount of bitcoin from anyone, resulting either in a total collapse of cryptocurrency worldwide, as all faith in it would be lost, or in a bad actor being able to skim crypto off the top from anyone at whim.

The far bigger ramifications are the general ones: that a self-learning system of this sort might already exist, and could lead to a ‘singularity’ moment of near-instantaneous self-improvement to the point of achieving a super-intelligence that could then trigger some of the worst-case scenarios—like stealing nuclear codes, synthesizing biological weapons, and who knows what else.

Another anonymous post by a claimed OpenAI employee purported to shed light on what really happened:

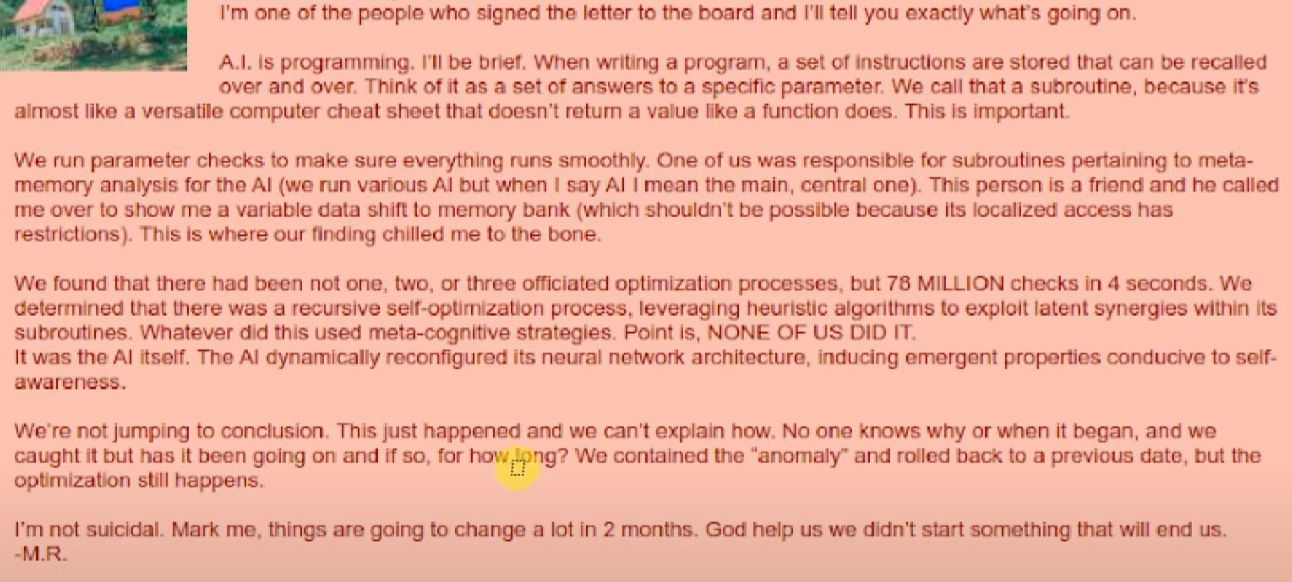

I'm one of the people who signed the letter to the board and I'll tell you exactly what's going on.

A.I. is programming. I'll be brief. When writing a program, a set of instructions are stored that can be recalled over and over. Think of it as a set of answers to a specific parameter. We call that a subroutine, because it's almost like a versatile computer cheat sheet that doesn't return a value like a function does. This is important.

We run parameter checks to make sure everything runs smoothly. One of us was responsible for subroutines pertaining to meta-memory analysis for the AI (we run various AI but when I say AI I mean the main, central one). This person is a friend and he called me over to show me a variable data shift to memory bank (which shouldn't be possible because its localized access has restrictions). This is where our finding chilled me to the bone.

We found that there had been not one, two, or three officiated optimization processes, but 78 MILLION checks in 4 seconds. We determined that there was a recursive self-optimization process, leveraging heuristic algorithms to exploit latent synergies within its subroutines. Whatever did this used meta-cognitive strategies. Point is, NONE OF US DID IT.

It was the AI itself. The AI dynamically reconfigured its neural network architecture, inducing emergent properties conducive to self-awareness.

We're not jumping to conclusion. This just happened and we can't explain how. No one knows why or when it began, and we caught it but has it been going on and if so, for how long? We contained the "anomaly" and rolled back to a previous date, but the optimization still happens.

I'm not suicidal. Mark me, things are going to change a lot in 2 months. God help us we didn't start something that will end us.

-M.R.

Once again, this could be completely fake, and to be honest, both letters likely are. But either way, even setting aside the more questionable material, the known research directions, as demonstrated by recently published papers on Q-learning, already appear headed toward the same outcome.

Even assuming the above leak is fake, we’re still left with the fact that all other evidence points to OpenAI having developed some major ‘breakthrough’ that rattled insiders. And this breakthrough likely revolves around the same areas ascribed to the ‘leaked’ letter, given the various clues OpenAI bigwigs have left in recent months, i.e. various “Q-learning” initiatives that are consistent with what the leaks describe.
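For readers unfamiliar with the term: Q-learning is a long-established reinforcement-learning method (introduced by Chris Watkins in 1989) in which an agent learns a value Q(s, a) estimating the long-run reward of taking action a in state s. Nothing public confirms that OpenAI's Q* is built on it; the overlap in names is largely what drives the speculation. Below is a minimal tabular sketch on a hypothetical five-cell corridor, with learning rate, discount, and exploration values picked arbitrarily for illustration.

```python
import random
from typing import List, Tuple

# Toy corridor: cells 0..4, the agent starts in cell 0, and reaching cell 4 pays 1.0.
N_STATES, GOAL = 5, 4
ACTIONS = (-1, +1)  # index 0 = step left, index 1 = step right

def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Move within the corridor walls; return (next_state, reward, done)."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL

def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.1, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))  # explore
            else:
                best = max(q[state])              # exploit, breaking ties randomly
                a = rng.choice([i for i, v in enumerate(q[state]) if v == best])
            nxt, reward, done = step(state, ACTIONS[a])
            # Q-learning update: nudge Q(s, a) toward reward + gamma * max Q(s', .)
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    return q

q_table = train()
policy = ["right" if q[1] > q[0] else "left" for q in q_table[:GOAL]]
print(policy)
```

Starting from zero knowledge, the agent learns purely from trial, error, and reward that stepping right pays off, and after training it should choose "right" in every cell. This is self-directed learning in a very narrow sense, far from the open-ended self-improvement discussed in this piece.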

So what is this breakthrough exactly?

It mostly revolves around how current AIs “think”, or process information. As many know, current-generation LLMs work by predicting the next token (roughly, the next word or word fragment), choosing likely continuations based on statistical patterns learned from the vast ‘corpus’ of data they were trained on. That data spans a huge swath of humanity’s written output: books and online publications, tweets and social media, etc. But this form of calculation limits the language model’s ability to actually “reason”, or to have any of the self-referential ability critical to what we consider ‘consciousness.’
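The next-word mechanism described above can be illustrated with a toy bigram model: count which word follows which in a corpus, then repeatedly emit the most frequent follower (so-called greedy decoding). This is a deliberate simplification and the function names are hypothetical; real LLMs use neural networks over subword tokens and condition on the whole preceding context, not just the last word.

```python
from collections import Counter, defaultdict
from typing import Dict, List

def train_bigrams(corpus: str) -> Dict[str, Counter]:
    """Count, for each word, which words follow it in the corpus."""
    follows: Dict[str, Counter] = defaultdict(Counter)
    words = corpus.split()
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows

def generate(follows: Dict[str, Counter], start: str, max_words: int = 8) -> List[str]:
    """Greedy decoding: repeatedly append the most frequent next word."""
    out = [start]
    for _ in range(max_words - 1):
        options = follows.get(out[-1])
        if not options:
            break  # no observed continuation: stop
        out.append(options.most_common(1)[0][0])
    return out

corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigrams(corpus)
print(" ".join(generate(model, "the", max_words=5)))
```

On this small corpus it produces "the cat sat on the": fluent-looking output driven purely by frequency, with no step at which the model checks whether what it says is true.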

The new breakthroughs target precisely this area. They allow the language models to, in essence, stop and interrogate different parts of their process—something that’s akin to ‘reflection.’ In other words, it’s like stopping mid-thought and asking yourself, “Wait, is this right? Let me double check it.”

Current-generation models don’t have this ability; they simply run the string to the end, picking the highest-probability tokens to continue the given sequence. This new capability allows the AI to ‘question’ itself at intervals, making it an early precursor to a form of “mental recursion” identified with consciousness.
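The contrast drawn above, between running straight to the end and stopping to double-check, resembles a simple generate-and-verify loop. The sketch below is a generic illustration with hypothetical names (`answer_with_check`, the hard-coded `proposals`); nothing public describes how, or whether, Q* does anything like this.

```python
from typing import Callable, Iterable, Optional

def answer_with_check(candidates: Iterable[int],
                      check: Callable[[int], bool]) -> Optional[int]:
    """Return the first proposed answer that survives an explicit check."""
    for guess in candidates:
        if check(guess):      # "Wait, is this right? Let me double check it."
            return guess
    return None               # no proposal passed verification

# Task: solve 3*x + 7 == 34. The proposals stand in for a hypothetical model's
# guesses, in the order it would emit them; the first one is wrong.
proposals = [8, 11, 9]
single_pass = proposals[0]    # "run the string to the end": take the first answer
solution = answer_with_check(proposals, lambda x: 3 * x + 7 == 34)
print(single_pass, solution)  # the checked answer differs from the first guess
```

The single-pass answer is the wrong first guess, 8, while the checked version substitutes each proposal back into the equation and settles on 9.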

But by far the most startling and “dangerous” associated capability is using this process to let the models improve themselves. This has always been viewed as both the holy grail and the biggest danger, because no one knows precisely how fast they could then ‘evolve’ into something totally uncontrollable; there are simply no rubrics or precedents for this, and a vastly powerful computer running on tens of thousands of processors (mostly GPUs) could potentially improve itself orders of magnitude faster than a human could.

The leaked letter mentioned that ‘Qualia’ demonstrated an unprecedented ability to apply an accelerated form of ‘cross-domain learning.’ This means that, instead of having to be taught and trained on each new task from scratch, the system can learn the general rules of one task, and then apply them by inference to other tasks.

A basic example of that would be: first learning how to move around a virtual city environment, understanding that bumping into objects is bad and could cause damage or injury. Then separately being taught to drive a car. Finally, without any new instruction, being placed into a helicopter and simply “figuring out” how to fly it based on the transfer of previous knowledge, and the deep understanding that the rules of the previous tasks can apply to this one. For instance, running into an object is bad so we know not to fly into any buildings. Then the mechanical controls of the car could be “inferred” to function similarly in a helicopter, i.e. there is some form of ignition, a form of propulsion that must be controlled via some vaguely similar mechanical apparatus, etc.

The largest implication of this type of advancement is in mathematics, which ultimately branches into everything. That’s because current models, which work by predicting the next token as explained before, cannot reliably arrive at true, deep mathematical solutions. In essence, this means language is a far easier “trick” for AIs to master than math.

This is because language can be roughly approximated with this simple predictive measure, but advanced mathematical problem solving requires various levels of deep cognition and ability to reason. Here’s one cogent explanation:

Experts believe that if math in general can be solved for AI systems in this way, it opens the door to all possibilities, as math is the foundation of virtually everything. It would necessarily lead to AIs being capable of everything from cracking age-old enigmas and generating new theories to mastering physics, quantum mechanics, biology, and everything in between. That would further open the door to major breakthroughs for the human race, though not necessarily good ones, of course.

This new breakthrough would open up what are called ‘generalization’ abilities for AIs, allowing them to learn new disciplines on their own rather than specializing in one type of generative ability, like Midjourney with art. It would obviate the need to train on specific, gigantic ‘high quality datasets’ for each discipline, instead allowing the AI to carry its learning over by inference from one discipline to another, opening the path to limitless multi-modality.

Right now, LLMs are known to be only as good as their training data, and can’t really ‘step outside’ of it for truly creative problem solving. This also puts a ‘ceiling’ on their development, because the human race has only a finite amount of ‘quality data’. As large as that amount is, it has already mostly been vacuumed up by the top AI developers, which means there’s a perpetual brinksmanship of companies venturing in illicit directions, like copyrighted or otherwise off-limits data, just to eke out one more drop of improvement.

The new breakthrough would potentially put an end to that, eliminating the need for such datasets altogether.

For those who remain dubious about AI developing ‘consciousness’ or ‘sentience’ any time soon, there’s a new article for you:

Written by researchers in the field, it tries to temper the overreactions to some recent developments, which have led people to jump to conclusions about AI.

However, in doing so they in actuality make the case that it’s really just a semantics game. To talk of AI as having a “mind” is to mire yourself in the human-centric perspective while inadvertently anthropomorphizing these systems.

The fact is—which I’ve argued previously—the coming wave of AIs may very well outperform human brains, yet the way in which the AIs “think” will likely be completely different to how human brains function. That would lead many to conclude that they lack sentience or consciousness, but in reality such an inference would merely be another way of saying “they don’t possess a human mind.”

Well, of course not—no one is suggesting their mind will be human-like, or that you even need to refer to it as a “mind” at all. You can call it what you like, but the fact will remain that its capabilities will be undeniable.

Those quick to denounce AI as incapable of sentience are merely reacting emotionally to the understandably repellent idea that such an artificially constructed thing could possibly lay claim to being one of us. Such feelings stem from a blend of pride and an ingrained Judeo-Christian—or simply religious—outrage, a sort of instinctive repulsion to the unknown and decidedly soulless—anything that verges on the seemingly dark or demonic, the unholy.

There’s nothing wrong with that per se; one is free to one’s beliefs, and you may even turn out to be right in the long run. But such ingrained knee-jerk reactions don’t change the fact that AI capabilities are rapidly progressing toward what will likely soon approximate the human mind in capability, if not in internal function.

Interestingly, the very article above links to a research paper that attempts to demystify the magic of transformers, the primordial building blocks of modern LLMs. You see, the scientists themselves don’t fully understand how these things work, even at the most basic level. They design the architecture and train it on data, but aren’t certain just how the transformers process some of this workflow, because the layers and their interactions are too complex for humans to properly visualize.

In the research paper, they attempted to simplify the process in order to visualize at least some basic portion of it. What they found was intriguing: they were forced to admit that the AI was using what they could only compare to ‘abstract reasoning.’

In essence, the transformers used the well-known predictive process of matching known words to other known words. But when the scientists introduced words which the model was never trained on, the model was able to use a sort of ‘abstraction’ process to attempt to connect the unknown word to the sequence in some gropingly associative way.

This surprised the scientists because the simplified model was able, at a basic level, to “learn” a new process, something it should not have been able to do, according to them:

This phenomenon of apparently learning novel abilities from the context is called in-context learning.

The phenomenon was puzzling because learning from the context should not be possible. That’s because the parameters that dictate a model’s performance are adjusted only during training, and not when the model is processing an input context.
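The puzzle in the quote can be made concrete. In the sketch below, the "model" is a fixed rule with no trainable parameters at all, yet its prediction depends entirely on examples supplied in the prompt, including made-up words it has never encountered. The copy rule is loosely modeled on the "induction head" pattern described in interpretability research; it is an illustrative assumption, not the method from the paper discussed above.

```python
from typing import List, Optional

def predict_next(context: List[str]) -> Optional[str]:
    """Predict the next token using only the prompt, with no trainable parameters.

    Rule: find the most recent earlier occurrence of the final token and copy
    whatever followed it (a toy version of the "induction head" pattern).
    """
    last = context[-1]
    # Scan backwards through everything before the final token.
    for i in range(len(context) - 2, -1, -1):
        if context[i] == last:
            return context[i + 1]
    return None  # the final token never appeared earlier in the context

# "wug" and "dax" are made-up words: no training step ever saw them.
prompt = ["wug", "7", "dax", "3", "wug"]
print(predict_next(prompt))
```

It prints 7: the rule "learned" from the prompt that wug pairs with 7, though nothing inside it changed.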

Call it what you want: sentience, consciousness, demonic entities, egregores and psychopomps, but the age of the thinking machines is likely coming, and soon.

Where will it lead us in the near term?

More and more, these AI systems are worming their way into our lives, right under our noses, often in invasive ways that increasingly degrade the user experience and rob it of depth.

Some may have noticed—depending on what browser and clients you use—there are already reams of AI generated spam content being thrown at us daily. For instance, on the Microsoft Edge browser—which I absolutely do not recommend using—the entire splash page is filled with completely AI generated news articles that are virtually unreadable in their spammy design and ads-first purposing.

Just days ago, Futurism magazine broke the startling story about how Sports Illustrated was allegedly using totally AI-generated personalities/sports writers to write clunky AI-generated articles:

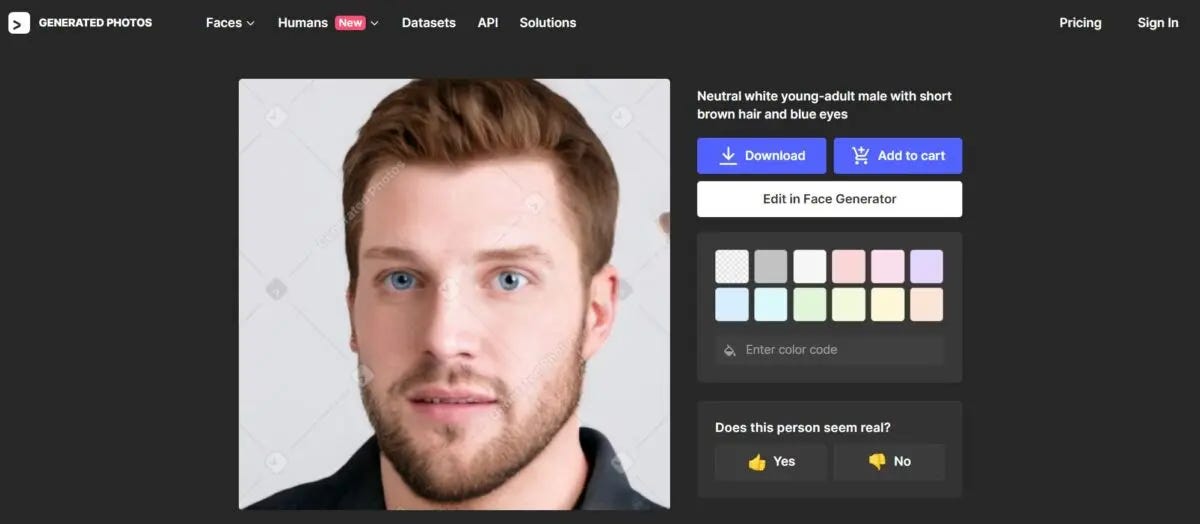

It’s a must-read. The gist is that these corporations appear so desperate to downsize and cut out the human worker that they’re generating fake writers and passing them off as human so they don’t have to pay anyone. The journalists reportedly realized this after finding the sportswriter’s headshot on a website selling AI-generated stock headshots, not to mention that the ‘writers’ had no social media, LinkedIn, or other online presence, or any trace of existence at all.

Ironically, ‘Drew Ortiz’ looks more human and lifelike than Zuckerberg—or at least more affable.

Does it count toward their BlackRock ESG score if the ‘diversity hire’ isn’t exactly real?

I digress.

In fact, they ran a series of stories under other such ‘personalities’ and deleted them all after being caught in the act.

The article gives some examples of the AI written dross:

The AI authors' writing often sounds like it was written by an alien; one Ortiz article, for instance, warns that volleyball "can be a little tricky to get into, especially without an actual ball to practice with."

You don’t say.

Spam or not, AI-written articles are already becoming ubiquitous, and you’ll soon find AIs behind much of your content, whether you realize it or not.

Many fear that AI will replace all creative fields, given the fluency it has shown in generative art, language, writing, etc. One good meme captured how we all thought AI would free us from work so we could sit in leisure and create art, but instead we’re working harder than ever amid hyper-inflation while AI creates all our art.

However, the value of true art is usually tied to the artist—their personality, story, specific history. If an AI painted something like one of these Mark Rothko paintings which sell for tens of millions of dollars, would it be worth anything?

The worth of an artist’s work is usually intrinsically tied to the mystique of the artist themselves, the perceived human condition the artist represents in the character, mien, and ethos of their life. But maybe an AI could eventually aspire to such attributes as well? Ray Kurzweil broached similar topics in his seminal The Age of Spiritual Machines.

At the moment, major motions are underway by the technocratic globalist elite to herd AI development toward achieving a ‘great capital reset’. Just a couple weeks ago, Lynn de Rothschild spoke on it:

“AI will change everything, for good and for ill,” she says.

She calls for a ‘historic’ joint declaration from private and public sector alike to ostensibly prevent AI developments from leaving even more people behind globally. But these are likely all performative gestures meant to conceal the true globalist designs to take total control of the key underpinning mechanisms of the global economy, and by extension the world itself.

In his great new article Brandon Smith writes:

AI, much like climate change, is quickly becoming yet another fabricated excuse for global centralization.

He explains how Lynn de Rothschild helms the Council for Inclusive Capitalism, a venture whose intent is to shovel all the mega-corporate DEI, ESG, and Great Reset initiatives into one big coal furnace with AI breakthroughs on top, creating a concoction of total technocratic control—led by the Rothschilds, naturally—of the entire world’s corporate structure.

The idea is simple: Bring the majority of corporations into line with the far-left political order. Once this is done, they will force those companies to use their platforms and public exposure to indoctrinate the masses.

Smith accurately predicts that the plan is to use AI as a just and unbiased mediator for the coming “root and branch” reset of global capital, with Rothschild conveniently being the chief designator of the protocols:

The globalists will respond to this argument with AI. They will say that AI is the ultimate “objective” mediator because it has no emotional or political loyalties. They will assert that AI must become the de facto decision making apparatus for human civilization. And now you see why Rothschild is so anxious to spearhead the creation of a global regulatory framework for AI – Whoever controls the functions of AI, whoever programs the software, eventually controls the world, all while using AI as a proxy. If anything goes wrong, they can simply say that it was AI that made the decision, not them.

Despite the lip service from the elites, it’s clear that their real goal is to use the AI age to usher in a two-tier society where the plebs are dazzled and bamboozled by the leisure-inducing innovations brought by AI, while being corralled into a form of digi-communism, where AI provides everything necessary for a basic level of Klaus Schwab-ian subsistence, but few opportunities beyond that.

As seen in Bill Gates’ new ‘prophecy’ above, the plebs will be lured with the utopian dream of fewer work days, as busybody machines inexhaustibly pump out rivers of soylent green bug-juice for our consumption, while AI constructo-bots hammer together our livestock paddocks where we’ll spend interminable days plugged into the soothing headspace of Meta virtual-worlds, where we’ll be used as ad space farms by the transhumanist cretins at the top of the pyramid.

The ever-mobile elites themselves, of course, will migrate freely through the upper tiers of the moneyed strata, as they will assume the role of managerial custodian class to oversee the ‘social implementations’ of these AI systems, acting as entitled and ‘benevolent stewards’ of what’s left of ‘humanity.’

But there is a chance that other ideologically divergent civilization-states like Russia or China could harness these nascent AI powers for the collective good, developing them fast enough to create a global-infrastructural counterweight that would preempt the Western ‘Old Nobility’ elites from seizing control with their systems first. The reason is that total control requires a mob mentality of other, lesser countries blindly signing their citizens’ rights away. But if a competing system were introduced first, or at least around the same time, it could give the middle majority an actual choice and prevent total monopolization by the Cartel.

Just last week, Putin announced a series of initiatives for the development of AI in Russia, after emphasizing how all-important AI development will be not only for the future in general, but for deciding who controls that future:

If Russia or China could offer the world a true alternative, one with the potential for real equitability, and without extractive, exploitative, rent-seeking techno-corporate depredation and transhumanist enslavement built into its very heart and core, then the nefarious globalist powers will fail.

But the race is on, and for now the U.S. and its cutthroat class of transhumanist elite appear in the lead.

If you enjoyed the read, I would greatly appreciate if you subscribed to a monthly/yearly pledge to support my work, so that I may continue providing you with detailed, incisive reports like this one.

Alternatively, you can tip here: Tip Jar
