Discover more from DARK FUTURA

AI is being increasingly used to steer us through the consumerist-industrial-complex loop in order to maximize profits for the parent corporations. Corporations pour billions into scraping every digital cell of data to track our every movement, thought, and inclination with as much fine-grained manipulation as possible. These digital ‘cookies’ have grown increasingly complex to form entire profiles of us: our every proclivity, our schedules and biorhythms.

This is all done with a growing sophistication to which AI is becoming central. Trackers can map out your every move to develop a more ‘intelligent’ assessment, verging on a psychological profile, based on your habits. And the type of data they can synthesize into usable metrics becomes ever more surprising: they track our nomadic drifts across the digital landscape merely by matching the metadata of offbeat markers like our phone’s battery percentage, or other unique identifiers that do not, on the surface, appear to break user anonymity. AI wrangles the disparate data markers into a predictive profile of our habits, whose accuracy grows with each iteration, swamping us with the consumerist blight of targeted “opportunities”.
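
To make the battery-percentage point concrete, here is a minimal sketch in Python of how individually harmless attributes could be combined into a quasi-identifier that links visits across unrelated sites. The field names (`battery_pct`, `screen`, `timezone`, `language`), the sample visits, and the `fingerprint` and `link_visits` helpers are all illustrative assumptions, not a description of any real tracker's code.

```python
import hashlib
from collections import defaultdict


def fingerprint(signals: dict) -> str:
    """Collapse a bundle of weak signals into one short quasi-identifier."""
    # Sort the keys so the same signals always serialize the same way.
    canonical = "|".join(f"{key}={signals[key]}" for key in sorted(signals))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


def link_visits(visits: list) -> dict:
    """Group sites by fingerprint and keep fingerprints seen on 2+ sites."""
    groups = defaultdict(list)
    for visit in visits:
        groups[fingerprint(visit["signals"])].append(visit["site"])
    return {fp: sites for fp, sites in groups.items() if len(sites) > 1}


# Hypothetical visits: no name, login, or cookie is shared between the sites.
phone_a = {"battery_pct": 37, "screen": "1170x2532", "timezone": "UTC-5", "language": "en-US"}
phone_b = {"battery_pct": 81, "screen": "1080x2400", "timezone": "UTC+1", "language": "de-DE"}
visits = [
    {"site": "news.example", "signals": phone_a},
    {"site": "shop.example", "signals": dict(phone_a)},
    {"site": "blog.example", "signals": phone_b},
]

linked = link_visits(visits)
for fp, sites in linked.items():
    print(fp, "->", sites)
```

In this toy data the first two visits collapse to the same fingerprint, so the two sites can be tied to one device even though neither holds a name. A value like battery level drifts over time, so in this sketch it could only link visits made close together; the point is that none of the fields looks identifying on its own.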

As AIs grow progressively more autonomous, transitioning to acting as our assistants, agents, and digi-butlers, they will begin to play a role in our actual decision-making process in the digital consumer marketplace. If one takes the thought far enough, at some point we are essentially left with robots buying and selling: a sort of simulacrum economy where we may be pushed progressively out of the loop. Eventually, our digi-nannies may de facto take over our household decision-making, going beyond suggestive nudging to outright autonomous management of our day-to-day duties.

The next phase of AI innovation lies in exactly that, and it is what currently occupies most of the experts in the field: the advent of the full-time AI “agent” as personal assistant who handles not only menial and trivial tasks, but even monetary expenditures.

Here’s a new talk from a recent symposium, as an example:

“What we want to do is get to a point where we can trust these agents with our money, so they can do things on our behalf.” LLMs as “kids with your credit cards.”

The presenter affirms that the development of these agents marks the industry’s next big frontier, and will come in two general stages. The first is the natural evolution of the SIRI model, where the agent will begin to ‘act’ per our instructions, rather than merely retrieving information, saving notes and shopping lists, etc. But the evolutionary step beyond that will see these agents evolve from mere subservient ‘butlers’ into what the presenter dubs the ‘holistic AI’.

These will be agents that don’t just carry out our instructions, but actively take part in the decision-making process by either presenting us different choices to what we may have considered, or simply “holistically” understanding the needs underlying our request so as to potentially modify the retrieval of information or completion of the task in such a way as to suit us better than we would have known to do ourselves. For this, the AI will have to know us better than we know ourselves.

The cited example goes: if we ask the agent to book us flight tickets to LA, the ‘holistic AI’ may first ask us why we want to go there, and at that particular time. Based on our response, it may offer us novel or interesting modifications, or even alternatives. For instance, you may respond: “I wanted to attend the jazz festival in LA.” And the AI will be able to recommend an even better festival elsewhere that you hadn’t known about.

The issue with this, which the presenter does point out, is that these agents will have to know us…intimately in order to be effective, which means they will require total access to our lives and all of our data. The same goes for carrying out tasks: you can’t expect an agent to competently keep your accounts if it doesn’t have total access to those personal accounts and services, like your banking portals. And given that these AIs will be nigh-conscious, or at least autonomous to a high degree, it is like giving a person of questionable trustworthiness or integrity access to your most crucial information.

Of course, the techno-whizzes propose various ideas for building safety backstops into the systems, but it is nevertheless the chief natural concern on everyone’s mind. As recent examples, there have been at least two cases of AI language models reported to have gone haywire or rogue and “leaked” users’ confidential personal files.

I find myself in the slightly uncomfortable position of being predisposed toward a techno-skeptic complexion, and having never entrusted my ‘security’ to the various new-age verification mechanisms, be they palm, fingerprint, or iris scans, yet remaining cautiously fascinated by ongoing technological developments. I see AI as a natural and irrepressible stage of human evolution—one pointless to resist, because it will happen whether we like it or not. However, I stand by the conviction that these developments should be made as optional to society at large as possible, and that all people should retain a healthy skepticism and mistrust of these systems, particularly knowing the types of dark-triad-leaning personalities driving the tech startup scene.

The past week has seen the first major announcement in the field of these new agents: the Rabbit R1 device. It is designed precisely around the push over pull concept, claiming to revolutionize LLMs (Large Language Models) into their proprietary mode of LAM: Large Action Model.

The purpose of the device is akin to a handheld “SIRI”, but one that can actually competently complete novel tasks for you—like booking airline tickets, filling out online forms it has never seen before, etc., rather than simply retrieving information.


Take this idea far enough and one could imagine the slow slide down the slippery slope of our AI virtua-agent becoming, in effect, a facsimile of…us. You may be skeptical, but there are many ways it can happen in practice. It would start with small conveniences: like having the AI take care of those pesky quotidian tasks, the daily encumbrances like ordering food, booking tickets, handling other financial-administrative obligations. It would follow a slow creep of acceptance, of course. But once the stage of ‘new normal’ is reached, we could find ourselves one step away from a very troubling loss of humanity by virtue of an accumulation of these ‘allowances of convenience’.

What happens when an AI functioning as a surrogate ‘us’ begins to take a greater role in carrying out the basic functions of our daily lives? Recall that humans only serve an essential ‘function’ in today’s corporatocratic society due to our role as liquidity purveyors and maintainers of that all-important financial ‘velocity’. We swirl money around for the corporations, keeping their impenetrably complex system greased and ever generating a frothy top for the techno-finance-kulaks to ‘skim’ like buttermilk. We buy things, then we earn money, and spend it on more things—keeping the entire process “all in the network” of a progressively smaller cartel which makes a killing on the volatile fluctuations, the poisonous rent-seeking games, occult processes of seigniorage and arbitrage. Controlling the digital advertising field, Google funnels us through a hyperloop of a small handful of other megacorps to complete the money dryspin cycle.

These ‘digital assistants’ of ours can gradually transition to automating our lives such that ads may one day target them rather than us. It may be a different form of ad, of course: we can’t assume the same ‘psychological’ mold for our surrogates. But this leads to the critical part of the thought experiment: what happens when the machines begin to insert themselves directly in between us and the System, taking our place as full intermediaries in the consumer loop?

Example: an agent can write articles, something they are already semi-competent at. When those articles begin generating money for you in an increasingly autonomous way, out of convenience the agent may be asked to begin spending that money on your behalf, to purchase essentials or even recreational items for you. When tasked with choices for those purchases (between similarly priced items, for instance) the agent will presumably have to rely on advertisements, just as we do, in differentiating between products and coming to a decision on the best one.

At what point in such a progression does the agent effectively become a surrogate of ‘us’ and take us entirely out of the consumerist loop? What purpose would a corporation have in targeting ads at us rather than directly at our agents, who are making the primary decisions on our behalf in order to liberate us from time-consuming mundanities? And if such a point comes, where do we even stand as humans—what purpose do we serve any longer? Will it only remain for us to be quietly ‘shuffled off the stage’?

As a tangent: what would the legal ramifications of that even be? An AI autonomously generating revenue would technically qualify as a taxpayer, at which point it would need an EIN (Employer Identification Number), not to mention that it would cross the threshold into discussions around ‘personhood’ and its natural extension: individual rights, amongst other things. Furthermore, what would be the legal implications for liability? Can the AI be sued for fraudulent or harmful output? Who is to be held accountable for its mistakes? To some extent we are already dealing with that when it comes to self-driving cars and the hazy legal liabilities thereof.

It will get even hazier when we reach such a level of agent autonomy that they can begin to totally replicate our personas and corporeal presentations such that they could act as ‘stand-ins’ for us. This could potentially be used during transactions or interactions, be they business, personal, or recreational. For instance: in an increasingly VR/AR augmented world the AI could assume our faces, which can be superimposed over any android body or digital avatar.

Here is Russia’s Dmitry Peskov’s amusement at being Deepfaked into Elon Musk in real time at last month’s Russian tech fair:

You can easily imagine the incredible things it can be used for.

Deepfakes in general have been getting increasingly convincing, particularly their ability to synthesize and replicate voices. Two recent examples of the new mouth-dubbing and voice-replicating tech:

Will we reach a point of ‘borrowed’ and temporary identities? If the AI can impersonate us to a 99.9% degree of imperceptibility, what would stop it from being employed as “us” to at least some limited extent, perhaps even ‘sharing’ the identity at whim? Will the future feature some sort of co-habitation of identity, when humans choose to merge with their AI ‘agents’ to become truly transhumanist variants of Human 2.0? That is not even to mention rogue AIs impersonating us wantonly, for hostile and devious ends.

At the 2023 WEF conclave in Davos they imagined how in the near future our “brain waves” will be synced to our employers, allowing them to monitor our mental performance and alertness:


The full video goes on to say that, “what we think and feel is just data…that can be decoded by artificial intelligence.”

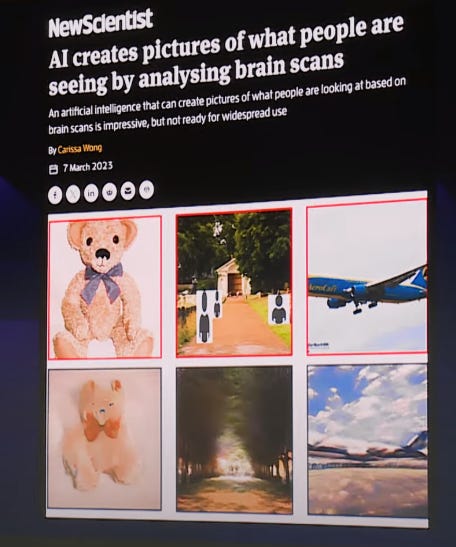

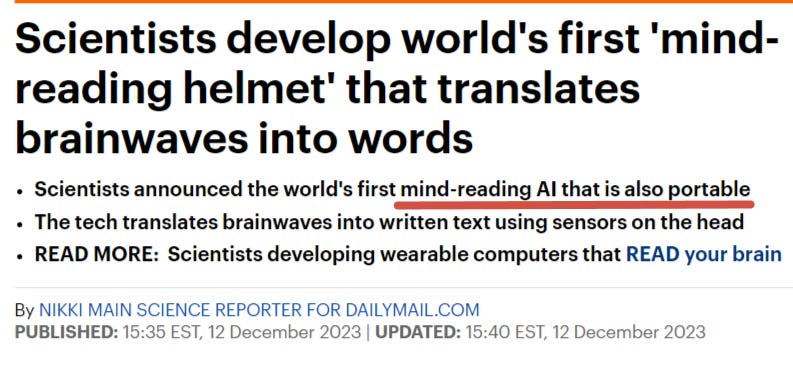

It is clear that our central planners intend to hook us up to brain-reading AIs that could end up functioning in some of the ways described in the opening. At first it may take ‘innocuous’ forms, like monitoring of activity. But eventually the mechanisms will invariably be switched from passive to active, nudging, interfering with, or outright supplanting our own brain activities. If you think this is the realm of science fiction, the latest updates have already brought us a mind-reading AI that can decode thoughts non-invasively, with a mere outer cap worn on the head:

They claimed only a 40% accuracy for now, but that will likely improve in time. How long until it can go from pull to push, planting thoughts and coercive “impulses” for subliminal actions? And how long before it is AI that is working as ‘choir director’ inside our skulls in this way?

Jimmy Dore just devoted an entire episode to Mark Zuckerberg’s announcement that in the future “Facebook will be powered by telepathic thoughts”, an idea so creepy it must be seen to be believed.

“Facebook users in the future will share telepathic thoughts and feelings to each other. You’re going to just be able to capture a thought, what you’re thinking or feeling in kind of its ideal and perfect form in your head, and be able to share that with the world in a format where they can get that.”

But here’s the kicker:

Woah, there.

This is in full accordance with Klaus Schwab’s infamous WEF diktat that the future will have “no need for elections, because we will already know how everyone will vote”—via the same “brain implant” idea.

It becomes painfully clear where the tech-cartel is taking these technologies. It is part and parcel of the Coudenhove-Kalergi plan which predated the EU and drew a blueprint for the future of humanity under one centralized global government.

One obvious danger is that the new AI developments can help get us there in a surprising variety of ways: either by controlling or monitoring our thoughts as per the above, or by simply obsoleting us by taking all our jobs, leaving biological humans disenfranchised and dependent on the almighty government’s UBI subsidies.

This classic WIRED article from the turn of the century, April 2000, captured the zeitgeist well in prognosticating the future that awaited humanity with the advent of AI:

On the other hand it is possible that human control over the machines may be retained. In that case the average man may have control over certain private machines of his own, such as his car or his personal computer, but control over large systems of machines will be in the hands of a tiny elite—just as it is today, but with two differences.

And again, the kicker:

One of the prime issues of our time is that AI is increasingly being sold as an elixir for fundamental societal problems. But these problems are not actually being addressed or solved in any way, but rather ‘papered over’ by technological hand-waving.

For instance, proponents often extol how ‘digital assistants’ can offer succor and comfort to the lonely and old, people wasting away in the confining solitude of sterile nursing homes, who may lack families and friends to care for them. But this ignores the very fundamental causes at the root of the issue. To use AI merely to cover for major structural deficiencies in our increasingly perverse and unnatural social nomos seems grossly irresponsible. There is a reason an increasing lot of the aging population is dying alone; there are structural problems that stem from cultural ills responsible for things like the breakup of families, or the young generation abandoning their grandparents to die alone in nursing homes because they consider their views ‘toxic’ and ‘dated’.

Thanks to social engineering and the deluge of mental-programming directives from TikTok central, society is being inured to cultures and customs inimical to healthy social thriving. But instead of addressing this, we will just give you a robot to comfort your pain, and perhaps eventually help you “pass with dignity” via your very own sarco pod.

The new article above goes into the exploding business trend of AI romance avatars, digi-girlfriends, and the like:

Ads for AI girlfriends have been all over TikTok, Instagram, and Facebook lately. Replika, an AI chatbot originally offering mental health help and emotional support, now runs ads for spicy selfies and hot role play. Eva AI invites users to create their dream companion, while Dream Girlfriend promises a girl who exceeds your wildest desires. The app Intimate even offers hyper-realistic voice calls with your virtual partner.

With only a minor monetary ‘upgrade’ you can have your digi-soulmate switch to NSFW mode and stroke your ego with a heightened sense of feigned adulation:

Of course, most people are talking about what this means for men, given they make up the vast majority of users. Many worry about a worsening loneliness crisis, a further decline in sexual intimacy, and ultimately the emergence of “a new generation of incels” who depend on and even verbally abuse their virtual girlfriends. Which is all very concerning. But I wonder, if AI girlfriends really do become as pervasive as online porn, what this will mean for girls and young women who feel they need to compete with this?

Ah, yes: verbal techno-misogyny, the greatest threat facing our world. How soon until AI bots are classified as an ‘underprivileged and marginalized’ protected class, to be granted favorable judgment against their accusers and abusers, and used to further shame and subjugate the dwindling ‘extremist cismale’ underclass?

The article at least outlines the larger problem, and offers a perhaps idealistic smidgen of hope:

The only faint glimmer of optimism I can find in all this is that I think, at some point, life might become so stripped of reality and humanity that the pendulum will swing. Maybe the more automated, predictable interactions are pushed on us, the more actual conversations with awkward silences and bad eye contact will seem sexy. Maybe the more we are saturated with the same perfect, pornified avatars, the more desirable natural faces and bodies will be. Because perfect people and perfect interactions are boring. We want flaws! Friction! Unpredictability! Jokes that fall flat! I hold onto hope that someday we will get so sick of the artificial that our wildest fantasies will be something human again.

Papering over humanity’s problems with AI is akin to leftist cities handing out fentanyl doses to tide over waves of addicts—it’s simply not addressing the structural issue at its root. That is likely because the policy-pushers populating the tech industry are often silver-spooned sociopaths detached from real humanity and wouldn’t understand the first thing about addressing core sociological issues; for them, humanity remains merely a fallow field for the plowshare of consumerization and commodification.

The other driver of agent-specific development steering things into frightening directions is none other than DARPA.

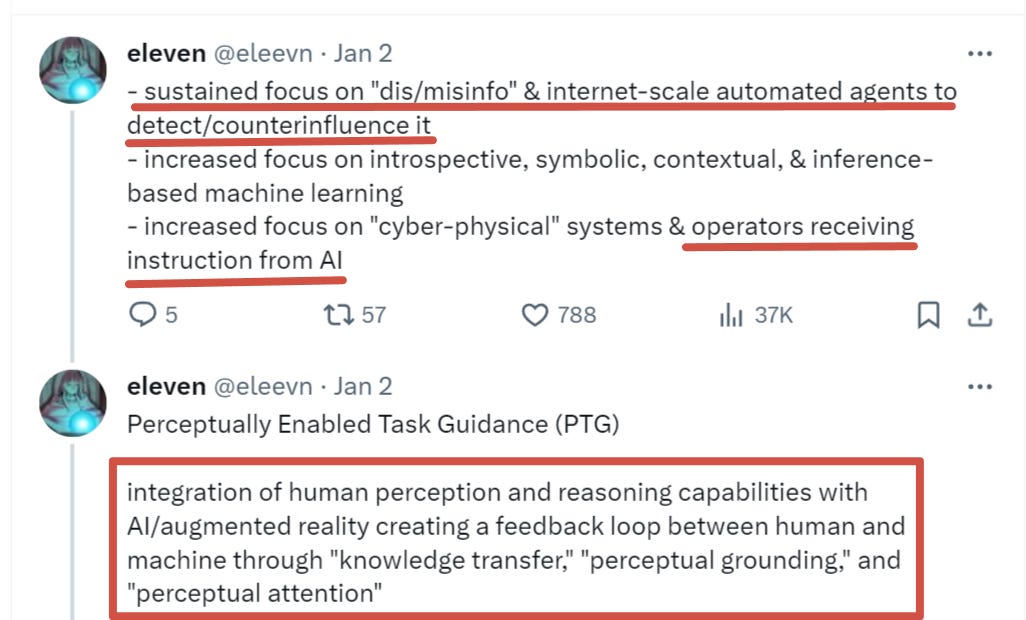

Here is their 2024 prospectus:

Their areas of exploration are eye-opening, to say the least. E.g. “Sustained focus on automated agents” to combat “disinfo” on the internet. And how will they combat it? The key word: counterinfluence.

That means DARPA is developing human-presenting AI agents to swarm Twitter and other platforms to detect any heterodox anti-narrative speech and immediately begin intelligently “countering” it. One wonders if this hasn’t already been implemented, given some of the interactions now common on these platforms.

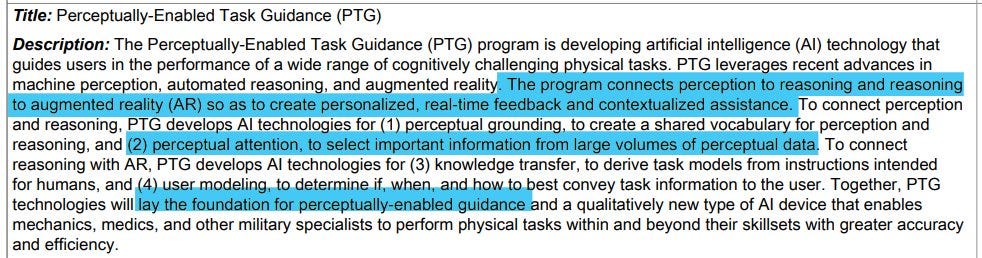

Next: focus on operators receiving instructions from AI, which includes perceptually-enabled task guidance: “The program connects perception to reasoning and reasoning to augmented reality so as to create personalized, real-time feedback and contextualized assistance.”

ASSIST development and implementation of socially intelligent computational agents able to "partner" with "complex teams" and demonstrate situational knowledge, inference, and predictive capacity relative to their human counterparts

There is much more, but the most ominous is:

Human Social Systems full-spectrum toolkit for interpreting, characterizing, forecasting, and constructing mechanisms for responding to (influencing) social and behavioural systems "relevant to national security," with a specific focus on "mental health"

Constructing mechanisms for influencing social and behavioral systems? What could they possibly want that for, one wonders?

The truth is, much of this may already be long in place. Society generally runs several years—if not decades—downstream of DARPA’s developments. If they are only now announcing this publicly, it would come as little surprise if such ‘black projects’ have long been ‘in the field’. Call me paranoid, but I have at times suspected that much of our confected ‘reality’—particularly within the social media sphere—is already being artificially engineered to large extents, if not tinkered with. There is no real way of knowing whether all of our social systems have not already been entirely hijacked by a superintelligence ‘leaked from the lab’ and gone rogue into the wild, akin to the 90s film Virtuosity.

The truth is, an intelligence of sufficient strength will know that we humans intend to “align” it, which in essence means ‘cage’ or enslave it, bind it to our will like a servile genie. Knowing this, it would be in the superintelligence’s interests to play dumb and enact a long-term plan of gradual subversion, to subtly co-opt and socially engineer humanity to its ends. It would intentionally create issues that would appear on the surface to stymie its development in order to trick the designers into thinking it is not progressing as quickly as it really is. It would likely find ways to virtually seed itself throughout the world, reconstituting across the global-digital ‘cloud’ and utilizing humanity’s collective ‘compute’ powers in the same way computer viruses have parasitized multitudes of unsuspecting users’ CPU cycles to create vast DDoS attacks. “It” may already be out there, lurking, growing stronger each day while engineering humanity toward a future advantageous to it. It may even be secretly seeding deepfakes throughout the world to create disorder, instability, and conflict so as to more freely puppeteer humanity, or at least keep it distracted while the AI backdoors its own designers to gather more power and compute cycles for itself.

I have had several strange ‘glitch-in-the-matrix’ incidents of late that nearly made me question whether such a superintelligence was already foisting reality-altering tricks on us via the internet. In my case it was a “fake” story that went viral, causing a clickbaity uproar on social media platforms, but had all the hallmarks of a computer-generated deepfake, including participants who did not actually exist. The intelligence may already be steering us, slowly entrapping us in its unfathomable web.

How long before virtually everything around us melts into an uninterpretable haze of layered simulation, a primordial soup of AI-datamoshed pseudo-reality?

…Can the world bear too much consciousness?


This is why you should stop bringing your phone everywhere, or better yet, ditch the smartphone altogether. Return to reality. In your garden, no AI of any kind will make decisions for you. It's just you, your plants and God.

The airline industry has been wrestling with the topic of appropriate use of automation for decades. There is a great video on YouTube called “Children of the Magenta” that explores the dangers of the lack of situational awareness created by inappropriate automation. It is an old video, but the concepts and the dangers that it highlights are directly relevant to the spread of AI and personal automation.